Introduction

Did you know that PyTorch, one of the most popular open-source libraries for machine learning, came to life as a research project in Facebook's AI Research lab?

Its flexibility and speed have caught the attention of AI enthusiasts, established data scientists, and beginners alike. If you're eager to dip your toes into the vast ocean of machine learning and AI, understanding how to install and use PyTorch is a great starting point.

In this blog post, we're going to walk you through a step-by-step guide on installing PyTorch, simplifying the process for those who are just starting their journey.

We've broken down this technical exercise into digestible steps, so don't worry if you're a complete beginner or a Keras fan looking to explore new territories.

By the end of this guide, you'll have PyTorch installed on your machine and your first step into machine learning accomplished. Buckle up for this exciting journey into the world of AI with PyTorch.

What is a Deep Learning Framework?

At its core, a deep learning framework provides the scaffolding for building, training, and validating deep learning models. Deep learning is a subset of machine learning that uses neural networks with many layers to learn patterns directly from data.

Deep learning frameworks like PyTorch, TensorFlow, and others offer pre-built components, making it easier to create sophisticated algorithms without starting from scratch.

Why Do Deep Learning Frameworks Matter?

Deep learning frameworks are crucial as they reduce the heavy lifting of coding complex algorithms by hand. They enable quick and more efficient development of scalable and robust deep learning models that can process and learn from large datasets, driving innovation in fields ranging from autonomous vehicles to medical diagnostics.

Understanding the Basics of Deep Learning

Deep learning is built on neural networks with many layers, hence the 'deep'. The basic unit is the 'neuron', and layers of these neurons form a network. Deep learning largely automates feature extraction: instead of relying on hand-engineered features, models learn useful representations directly from data.
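To make the idea of a 'neuron' concrete, here is a minimal sketch, assuming PyTorch is installed. A fully connected layer computes, for each neuron, a weighted sum of its inputs plus a bias, and a nonlinearity is then applied. The layer sizes and input values are arbitrary choices for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# A layer of 3 neurons, each receiving the same 4 inputs.
layer = nn.Linear(in_features=4, out_features=3)

x = torch.tensor([[1.0, 2.0, 3.0, 4.0]])  # one example with 4 features

# What nn.Linear computes: a weighted sum plus bias for every neuron (x @ W^T + b).
manual = x @ layer.weight.T + layer.bias
builtin = layer(x)

# A nonlinearity (here ReLU) turns the weighted sums into neuron activations.
activations = torch.relu(builtin)

print(torch.allclose(manual, builtin))  # True
print(activations.shape)                # torch.Size([1, 3])
```

Stacking several such layers, each feeding the next, is what makes a network 'deep'.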

Role of Deep Learning in AI

Deep learning propels AI capabilities to new heights. Its role in AI is to model high-level abstractions in data, which allows AI systems to make decisions with minimal human intervention. From speech recognition to natural language processing, deep learning is at the forefront of creating intelligent systems that solve complex problems.

Popular Deep Learning Frameworks

There's a multitude of frameworks available for different needs: TensorFlow, Keras, PyTorch, and Caffe, to name a few. TensorFlow is renowned for its scalability and production readiness, while Keras appeals with its simplicity. PyTorch, on the other hand, is known for its ease of use and its dynamic computation graphs.

A Close Look at PyTorch

PyTorch is an open-source machine learning library used extensively for applications such as computer vision and natural language processing. It's known for its dynamic computation graph, efficient memory usage, and ease of optimization, which have made it a go-to choice for both academic researchers and industry practitioners.

Origin and Developers behind PyTorch

PyTorch was developed at Facebook's AI Research lab (FAIR) and has benefited from contributions by many companies and individual developers. The framework is continually updated and enhanced by a large open-source community, as the activity on the PyTorch GitHub repository shows.

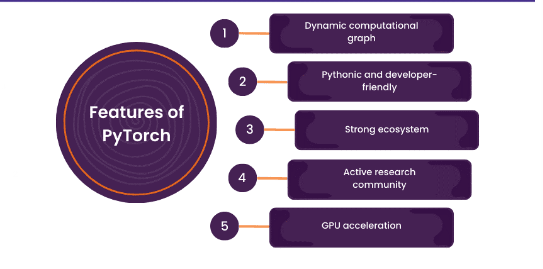

Features of PyTorch

One of PyTorch's most applauded features is its dynamic computation graph: the graph is built as your code runs, so ordinary Python control flow can change it from one forward pass to the next. Its API is straightforward, which encourages rapid development and prototyping.

Plus, PyTorch's tutorials and documentation cover everything from installation to advanced topics, making the framework easy to install and adopt.

Who uses PyTorch?

From startups to large tech enterprises, many have chosen PyTorch for research and development. It’s beloved in academic circles for its simplicity and ease of use, which makes it perfect for teaching, while its robustness and scalability make it equally suitable for industrial applications.

The PyTorch Ecosystem

Not only is PyTorch a standalone framework, but it's also part of a larger ecosystem that includes domain libraries such as torchvision, torchaudio, and torchtext. Moreover, the ease of installing PyTorch with pip (the package is named torch, so the command is pip install torch) contributes to its rapidly growing user base.

PyTorch vs. Other Deep Learning Frameworks

Choosing the right deep learning framework can be pivotal for the success of a project. This section will help elucidate how PyTorch compares with other stalwarts in the domain, examining not just raw performance but also other factors like ease of use, community support, and versatility.

PyTorch vs TensorFlow

The rivalry between PyTorch and TensorFlow is well known. TensorFlow has long excelled at production deployment and scalability, while PyTorch is often lauded for its user-friendly interface and dynamic computation graph, which offer more flexibility in model design and experimentation. The gap has narrowed since TensorFlow 2.x made eager execution the default.

PyTorch vs Keras

Keras is an API designed for human beings, not machines, which makes it exceedingly easy to build neural networks. However, PyTorch provides more granularity and control, which can be essential for certain complex projects.

PyTorch vs Caffe

Caffe is recognized for its speed in training models and is great for projects where the model architecture does not change frequently. PyTorch, with its dynamic nature, is better suited when models are experimental or research-inclined.

Understanding the Unique Advantages of PyTorch

One of PyTorch’s unique aspects is its imperative programming model, which aligns with Python’s programming style. This native congruence with Python allows for easier debugging and a more intuitive handling of operations.

Choosing the Right Framework for Your Project

The decision between PyTorch and its peers boils down to the specific requirements of the project, expertise of the team, and long-term goals. PyTorch is an excellent choice for projects that prioritize development speed, research, and experimentation over immediate production deployment.

Getting Started with PyTorch

Before embarking on any PyTorch project, you need a suitable environment. Check the prerequisites for installing PyTorch (a supported Python version and, if you want GPU acceleration, a compatible GPU and driver) so your system can handle heavy computational tasks smoothly.

Installing PyTorch in Various Operating Systems

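Whether you're a Windows, macOS, or Linux user, installing PyTorch is a straightforward process. The most common method is pip (pip install torch), and the install selector on the official PyTorch website generates the exact command for your operating system, package manager, and CUDA version. Learn how to troubleshoot common installation pitfalls, and remember to verify your installation before you proceed.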
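A quick sanity check like the following sketch confirms that Python can import PyTorch and reports which GPU backend, if any, is visible. The `mps` check only matters on Apple-silicon Macs and is guarded with `getattr` in case an older build lacks that attribute.

```python
import torch

# Confirm the package imports and report its version.
print("PyTorch version:", torch.__version__)

# CUDA is only available with an NVIDIA GPU and a CUDA-enabled build.
cuda_ok = torch.cuda.is_available()
print("CUDA available:", cuda_ok)
if cuda_ok:
    print("GPU:", torch.cuda.get_device_name(0))

# Apple-silicon Macs expose the Metal (MPS) backend instead of CUDA.
mps_backend = getattr(torch.backends, "mps", None)
mps_ok = bool(mps_backend is not None and mps_backend.is_available())
print("MPS available:", mps_ok)

# A tiny computation shows the install works end to end.
x = torch.rand(2, 3)
print(x @ x.T)
```

If the import fails or CUDA is unexpectedly reported as unavailable, the usual culprit is a CPU-only build or a mismatched driver; rerunning the command from the website's selector is a sensible first step.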

Understanding PyTorch Basics: Tensors and Autograd

PyTorch's building blocks are tensors and the autograd system. Tensors are multidimensional arrays, and autograd records the operations performed on them so PyTorch can compute gradients automatically, the backbone of neural network training.
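Here is a minimal sketch of both ideas working together. A tensor created with `requires_grad=True` has its operations recorded, and calling `backward()` on a scalar result fills in `.grad` with the derivative. The function y = sum(x² + 3x) is an arbitrary example whose gradient, 2x + 3, is easy to check by hand.

```python
import torch

# A 1-D tensor; requires_grad=True tells autograd to record operations on it.
x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
print(x.shape, x.dtype)  # torch.Size([3]) torch.float32

# Build a small computation: y = sum(x^2 + 3x).
y = (x ** 2 + 3 * x).sum()

# backward() walks the recorded graph and stores dy/dx = 2x + 3 in x.grad.
y.backward()

print(y.item())  # 32.0
print(x.grad)    # tensor([5., 7., 9.])
```

In a real model, x would be the network's weights and y the loss, but the mechanism is exactly the same.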

Loading Data in PyTorch

Data is the lifeblood of machine learning. PyTorch provides efficient tools for loading, transforming, and batching data, which are indispensable for training models. Here you'll get familiar with the Dataset and DataLoader classes in torch.utils.data.
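The division of labor between the two classes can be sketched as follows. A `Dataset` implements `__len__` and `__getitem__` to return one sample, and a `DataLoader` shuffles the samples and stacks them into batches. `SquaresDataset` is a hypothetical toy dataset made up for illustration.

```python
import torch
from torch.utils.data import Dataset, DataLoader


class SquaresDataset(Dataset):
    """Hypothetical toy dataset: input i, target i**2."""

    def __init__(self, n: int):
        self.x = torch.arange(n, dtype=torch.float32).unsqueeze(1)  # shape (n, 1)
        self.y = self.x ** 2

    def __len__(self) -> int:
        # DataLoader uses this to know how many samples exist.
        return len(self.x)

    def __getitem__(self, idx: int):
        # Return one (input, target) pair; DataLoader stacks these into batches.
        return self.x[idx], self.y[idx]


dataset = SquaresDataset(10)
loader = DataLoader(dataset, batch_size=4, shuffle=True)

batch_sizes = []
for inputs, targets in loader:
    batch_sizes.append(inputs.shape[0])
    print(inputs.shape, targets.shape)

print(batch_sizes)  # [4, 4, 2]: 10 samples in batches of 4
```

For image or audio data, `__getitem__` is also where per-sample transforms (for example, those from torchvision) are usually applied.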

Building your First Neural Network with PyTorch

Ready to build your first PyTorch model? We'll walk you through initializing a neural network, choosing loss functions and optimizers, and setting up the training loop — your first iteration towards becoming a PyTorch expert.
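The three steps above (initialize a network, choose a loss and optimizer, run the training loop) fit into a short sketch. The synthetic data (y = 3x - 1 plus noise), layer sizes, learning rate, and epoch count are all illustrative assumptions rather than recommended settings.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical synthetic data: y = 3x - 1 plus a little noise.
x = torch.linspace(-1, 1, 100).unsqueeze(1)  # shape (100, 1)
y = 3 * x - 1 + 0.05 * torch.randn_like(x)

# 1. Initialize a small network.
model = nn.Sequential(
    nn.Linear(1, 16),
    nn.ReLU(),
    nn.Linear(16, 1),
)

# 2. Choose a loss function and an optimizer.
loss_fn = nn.MSELoss()
optimizer = torch.optim.Adam(model.parameters(), lr=0.01)

# 3. The training loop: forward pass, loss, backward pass, parameter update.
losses = []
for epoch in range(300):
    optimizer.zero_grad()           # clear gradients from the previous step
    predictions = model(x)          # forward pass
    loss = loss_fn(predictions, y)  # compare predictions with targets
    loss.backward()                 # autograd computes gradients
    optimizer.step()                # update the weights
    losses.append(loss.item())

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.4f}")
```

With real data you would iterate over a DataLoader inside each epoch instead of passing the whole dataset at once, but the four lines in the loop body stay the same.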

Diving into PyTorch Code

PyTorch's syntax is intuitive and Pythonic, making the transition smooth for new learners or those migrating from other frameworks like TensorFlow. The dynamic computation graph and eager execution mode of PyTorch enable a flexible and interactive approach to model development.

Key Concepts You'll Learn:

- Define and manipulate tensors, which are the building blocks of PyTorch.

- Use automatic differentiation with torch.autograd to compute gradients.

- Integrate with NumPy and other libraries seamlessly.
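The NumPy integration mentioned in the list can be illustrated with a short sketch. One detail worth knowing is that `torch.from_numpy` and `.numpy()` share memory with the source object, whereas `torch.tensor` always makes a copy. The array values are arbitrary.

```python
import numpy as np
import torch

arr = np.array([1.0, 2.0, 3.0])

# torch.from_numpy shares memory with the NumPy array (no copy).
t = torch.from_numpy(arr)
arr[0] = 10.0  # t now sees this change too: its first element is 10.0

# torch.tensor always copies, so later NumPy edits don't affect it.
t_copy = torch.tensor(arr)
arr[1] = 20.0  # t changes, t_copy keeps its original second element (2.0)

# .numpy() converts a CPU tensor back to an ndarray.
back = (t * 2).numpy()
print(type(back), back)
```

The shared-memory behavior avoids copies for large arrays, but it also means in-place edits on one side are visible on the other.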

Exploring PyTorch Libraries and Tools

PyTorch has a rich ecosystem, complete with libraries that cater to various needs in the data science workflow.

Essential Libraries:

- torchvision: for image processing and common datasets.

- torchtext: for natural language processing tasks (note that torchtext is no longer actively developed; its 0.18 release was announced as the last).

- torchaudio: for audio data handling.

- Explore community-contributed libraries like fastai and Hugging Face Transformers.

Creating and Training Models

PyTorch makes it simple to construct models by subclassing nn.Module. You can define custom layers, initialize parameters, and string together the components of your neural network in an organized manner.

Skills You'll Acquire:

- Define custom neural network architectures using nn.Module.

- Implement the forward pass, and let autograd handle backward propagation.

- Utilize loss functions and optimizers from torch.nn and torch.optim.
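The skills listed above can be combined in one sketch. It defines a custom layer with its own learnable parameter and custom initialization, composes it into a model, and runs a single optimization step with a loss from `torch.nn` and an optimizer from `torch.optim`. `ScaledLinear` and `TinyClassifier` are hypothetical names, and the sizes are arbitrary.

```python
import torch
import torch.nn as nn


class ScaledLinear(nn.Module):
    """Hypothetical custom layer: a linear map followed by a learnable per-output scale."""

    def __init__(self, in_features: int, out_features: int):
        super().__init__()
        self.linear = nn.Linear(in_features, out_features)
        # nn.Parameter registers the tensor so optimizers and state_dict() see it.
        self.scale = nn.Parameter(torch.ones(out_features))
        # Custom parameter initialization.
        nn.init.xavier_uniform_(self.linear.weight)
        nn.init.zeros_(self.linear.bias)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.linear(x) * self.scale


class TinyClassifier(nn.Module):
    def __init__(self, in_features: int, hidden: int, num_classes: int):
        super().__init__()
        self.body = nn.Sequential(ScaledLinear(in_features, hidden), nn.ReLU())
        self.head = nn.Linear(hidden, num_classes)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Only the forward pass is written by hand; autograd derives the backward pass.
        return self.head(self.body(x))


model = TinyClassifier(in_features=8, hidden=16, num_classes=3)
loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)

x = torch.randn(5, 8)
labels = torch.tensor([0, 2, 1, 0, 2])

logits = model(x)               # forward pass, shape (5, 3)
loss = loss_fn(logits, labels)
optimizer.zero_grad()
loss.backward()                 # backward pass computed by autograd
optimizer.step()

n_params = sum(p.numel() for p in model.parameters())
print(logits.shape, n_params)   # torch.Size([5, 3]) 211
```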

Evaluating Model Performance

Developing accurate models also means evaluating their performance meticulously. PyTorch provides the building blocks for validation, such as evaluation mode and torch.no_grad(), while libraries like TorchMetrics and scikit-learn supply ready-made metrics.

You'll Learn How to:

- Split data into training and validation sets for robust evaluation.

- Use metrics such as accuracy, precision, recall, and F1 score.

- Build confusion matrices and ROC curves with scikit-learn for deeper analysis.
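To show what these metrics actually measure, the sketch below computes them by hand from the confusion-matrix counts of a binary classifier. `binary_metrics` is a hypothetical helper, and the random model and validation data only demonstrate the evaluation flow (`model.eval()` plus `torch.no_grad()`), so their scores are meaningless. In practice, TorchMetrics or `sklearn.metrics` (for example `confusion_matrix` and `roc_curve`) provide tested implementations.

```python
import torch


def binary_metrics(preds: torch.Tensor, targets: torch.Tensor) -> dict:
    """Accuracy, precision, recall and F1 for 0/1 predictions (positive class = 1)."""
    tp = ((preds == 1) & (targets == 1)).sum().item()
    fp = ((preds == 1) & (targets == 0)).sum().item()
    fn = ((preds == 0) & (targets == 1)).sum().item()
    tn = ((preds == 0) & (targets == 0)).sum().item()
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(targets),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "confusion": [[tn, fp], [fn, tp]],  # rows: true class 0/1, cols: predicted 0/1
    }


# Hypothetical validation split and untrained model, just to show the flow.
torch.manual_seed(0)
model = torch.nn.Linear(4, 1)
val_x = torch.randn(20, 4)
val_y = torch.randint(0, 2, (20,))

model.eval()               # put layers such as dropout into inference mode
with torch.no_grad():      # no gradient tracking is needed for evaluation
    probs = torch.sigmoid(model(val_x)).squeeze(1)
    val_preds = (probs >= 0.5).long()
print(binary_metrics(val_preds, val_y))

# A fixed example that is easy to check by hand: tp=2, fp=1, fn=1, tn=2.
preds = torch.tensor([1, 1, 0, 0, 1, 0])
targets = torch.tensor([1, 0, 1, 0, 1, 0])
m = binary_metrics(preds, targets)
print(m)
```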

Saving and Loading Models

Mastering the process of saving and loading model checkpoints is crucial for long training sessions, iterative improvements, and deployment scenarios.

Takeaways Include:

- Save and load either the entire model object or just its parameters via model.state_dict(), the approach the PyTorch documentation recommends.

- Use torch.save and torch.load for checkpoint management.

- Understand how to resume training or perform inference using saved models.
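A common checkpoint pattern is to bundle the model's `state_dict()`, the optimizer's state, and the training progress into one dictionary. The sketch below does this, then restores everything into a freshly built model. The epoch value, file location, and tiny `nn.Linear` model are placeholders, and `weights_only=True` restricts loading to tensors and plain Python containers, which is all this checkpoint holds.

```python
import os
import tempfile

import torch
import torch.nn as nn

model = nn.Linear(3, 1)
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

# Take one training step so there is something worth saving.
loss = model(torch.randn(4, 3)).pow(2).mean()
loss.backward()
optimizer.step()

path = os.path.join(tempfile.mkdtemp(), "checkpoint.pt")

# Save a checkpoint: parameters, optimizer state, and training progress.
torch.save(
    {
        "epoch": 5,  # hypothetical epoch counter
        "model_state": model.state_dict(),
        "optimizer_state": optimizer.state_dict(),
    },
    path,
)

# Later: rebuild the same architecture, then load the saved state into it.
restored = nn.Linear(3, 1)
restored_opt = torch.optim.SGD(restored.parameters(), lr=0.01)
checkpoint = torch.load(path, weights_only=True)
restored.load_state_dict(checkpoint["model_state"])
restored_opt.load_state_dict(checkpoint["optimizer_state"])
start_epoch = checkpoint["epoch"] + 1  # resume training from the next epoch

# For inference instead of training, switch to evaluation mode.
restored.eval()
```

Because a state_dict stores only tensors keyed by parameter name, the code that defines the architecture must be available when loading; that is the trade-off for a smaller and more portable file than pickling the whole model.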

Advanced PyTorch Concepts

As datasets expand and models grow more complex, distributed training often becomes necessary. PyTorch includes tools for training in parallel across multiple GPUs and even across machines, which can substantially shorten training time.

How to Implement Distributed Training:

PyTorch provides torch.nn.DataParallel (a single process driving multiple GPUs on one machine) and torch.nn.parallel.DistributedDataParallel (one process per GPU, on one or many machines); the PyTorch documentation recommends DistributedDataParallel even on a single machine. With DistributedDataParallel, each process holds a replica of the model and trains on its own shard of the data, and gradients are averaged across processes during the backward pass. Setting up distributed training involves initializing the process group with torch.distributed.init_process_group, sharding your dataset across processes (for example with DistributedSampler), and wrapping your model in DistributedDataParallel.
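The three setup steps can be sketched as below. To keep the example runnable on a single CPU machine, it starts just one process (`world_size=1`) with the `gloo` backend and a file-based rendezvous. A real multi-GPU job would typically launch one process per GPU (for example with `torchrun`), use the `nccl` backend, and move each replica to its own device. The random dataset and hyperparameters are placeholders.

```python
import os
import tempfile

import torch
import torch.distributed as dist
import torch.nn as nn
from torch.nn.parallel import DistributedDataParallel as DDP
from torch.utils.data import DataLoader, TensorDataset
from torch.utils.data.distributed import DistributedSampler


def train(rank: int, world_size: int, init_file: str) -> float:
    # 1. Join the process group (gloo works on CPU; nccl is the usual GPU backend).
    dist.init_process_group(
        backend="gloo",
        init_method=f"file://{init_file}",
        rank=rank,
        world_size=world_size,
    )

    # 2. DistributedSampler gives each process its own shard of the data.
    dataset = TensorDataset(torch.randn(64, 10), torch.randn(64, 1))
    sampler = DistributedSampler(dataset, num_replicas=world_size, rank=rank)
    loader = DataLoader(dataset, batch_size=8, sampler=sampler)

    # 3. Wrap the model; DDP averages gradients across processes in backward().
    model = DDP(nn.Linear(10, 1))
    optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
    loss_fn = nn.MSELoss()

    for epoch in range(2):
        sampler.set_epoch(epoch)  # reshuffle the shards differently each epoch
        for inputs, targets in loader:
            optimizer.zero_grad()
            loss = loss_fn(model(inputs), targets)
            loss.backward()
            optimizer.step()

    dist.destroy_process_group()
    return loss.item()


# Single-process demo; the rendezvous file must not exist beforehand.
init_file = os.path.join(tempfile.mkdtemp(), "ddp_init")
final_loss = train(rank=0, world_size=1, init_file=init_file)
print("final loss:", final_loss)
```

The training loop itself is unchanged from single-device code; the distributed parts are confined to process-group setup, the sampler, and the DDP wrapper.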

Using PyTorch with CUDA Support

In the realm of machine learning, speed and efficiency during model training are paramount. CUDA, a parallel computing platform and programming model developed by Nvidia, allows developers to significantly speed up computing operations by leveraging GPUs.

How to Enable CUDA Support:

Activating CUDA in PyTorch is straightforward. First, make sure you have an NVIDIA GPU with a compatible driver. Then install a CUDA-enabled build of PyTorch using the command generated on the official website. In your Python code, move both the model and its input data to the GPU with .to('cuda'); the model and the data must be on the same device. Offloading heavy computation to the GPU typically speeds up training considerably.
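A common, portable pattern is to pick the device once and move everything to it, falling back to the CPU so the same script runs on machines without a GPU. The model and batch shapes in this sketch are arbitrary.

```python
import torch
import torch.nn as nn

# Fall back to the CPU when no CUDA GPU is present, so the script runs anywhere.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = nn.Linear(8, 2).to(device)     # move the parameters to the device
batch = torch.randn(16, 8).to(device)  # inputs must live on the same device

with torch.no_grad():
    output = model(batch)              # runs on the GPU if one was found

print(device, output.device)

# NumPy only works with CPU memory, so results must come back to the CPU first.
result = output.cpu().numpy()
```

Forgetting to move either the model or the inputs is a frequent source of "expected all tensors to be on the same device" errors.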

PyTorch's Dynamic Computation Graphs

Unlike frameworks that build a static computation graph before running it (as TensorFlow 1.x did by default), PyTorch uses dynamic computation graphs, also known as the "define-by-run" approach. This gives developers the flexibility to change the network's behavior on the fly, a significant advantage when working with variable-length inputs or experimenting with novel model structures.

How it Works:

In PyTorch, the computation graph is built as operations occur. This means each forward pass can define a new computational graph, allowing for dynamic modifications to the network architecture as dictated by the logic of your code. This is especially useful in complex models such as recursive neural networks or when developing new, experimental model architectures.
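Here is a minimal illustration of define-by-run. The hypothetical `DynamicDepthNet` decides at run time, using plain Python control flow on the input, how many times to apply its layer. Each forward pass therefore records a different graph, and autograd backpropagates through whichever path actually ran. The branching rule is arbitrary and only meant to show the mechanism.

```python
import torch
import torch.nn as nn


class DynamicDepthNet(nn.Module):
    """Hypothetical model whose depth depends on the input at run time."""

    def __init__(self, width: int = 4):
        super().__init__()
        self.layer = nn.Linear(width, width)
        self.last_steps = 0  # how many times the layer ran in the last forward pass

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Ordinary Python control flow decides the graph for this particular input.
        steps = 1 if x.sum() > 0 else 3
        self.last_steps = steps
        for _ in range(steps):
            x = torch.tanh(self.layer(x))
        return x


torch.manual_seed(0)
model = DynamicDepthNet()

for sample in (torch.ones(1, 4), -torch.ones(1, 4)):
    out = model(sample)
    out.sum().backward()  # gradients flow through the path that actually ran
    print(model.last_steps, out.shape, model.layer.weight.grad.abs().sum().item())
    model.zero_grad()
```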

PyTorch's Production Ecosystem

Transitioning a model from research to production is a nuanced process that involves different tools and practices. PyTorch simplifies this transition with TorchScript, a tool that converts PyTorch models to a serialized form that can run within a C++ runtime environment, without requiring Python.

How to Use TorchScript for Model Deployment:

To prepare a model for deployment, either pass it to torch.jit.script (which compiles the model's Python code and also works as the @torch.jit.script decorator on standalone functions) or call torch.jit.trace with sample inputs. Tracing records only the operations executed for those inputs, so data-dependent control flow is not captured; use scripting for models that branch on their inputs. Either way, the result is a TorchScript model that can be saved to a file and run in production without a Python dependency.
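The difference between scripting and tracing shows up as soon as a model branches on its input. The sketch below scripts a hypothetical `Gate` module, traces it for comparison (PyTorch emits a TracerWarning because the branch depends on the tensor's value), saves the scripted version, and loads it back. The saved file is the kind of artifact LibTorch can load from C++ with `torch::jit::load`.

```python
import os
import tempfile

import torch
import torch.nn as nn


class Gate(nn.Module):
    """Hypothetical model with data-dependent control flow."""

    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(4, 4)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        if x.sum() > 0:
            return self.linear(x)
        return -x


model = Gate().eval()
example = torch.randn(1, 4)

# Scripting compiles the Python code, so both branches of the if/else are kept.
scripted = torch.jit.script(model)

# Tracing records only the operations run for this example input,
# so the branch that was not taken is silently dropped.
traced = torch.jit.trace(model, example)

# Save the scripted model to a file and load it back.
path = os.path.join(tempfile.mkdtemp(), "gate_scripted.pt")
scripted.save(path)
loaded = torch.jit.load(path)

neg = -torch.ones(1, 4)
with torch.no_grad():
    # The loaded scripted model matches eager PyTorch on the negative branch.
    print(torch.equal(loaded(neg), model(neg)))
```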

Using TorchScript for Model Deployment

Deployment is a critical phase, marking a model's transition from a research project to a component in a functional application stack. PyTorch ensures this process is as smooth as possible with TorchScript, empowering researchers and engineers to deploy models efficiently into production environments that require high performance and reliability.

How to Transition a Model to Deployment:

After converting your model to TorchScript, you can deploy it in a range of environments, from server backends using tools like TorchServe to mobile devices using PyTorch Mobile. The converted models can be optimized for performance and are independent of the original Python code, making them versatile across deployment scenarios.

Exploring the PyTorch Community and Resources

The potency of a framework isn't just determined by code and features. The true strength lies in its community – the humans behind the code. The community is both Torchbearer and custodian, breathing life into the framework by contributing, resolving doubts, aiding new users, and much more.

Learning from PyTorch Tutorials and Docs

An essential way to understand PyTorch is through its comprehensive tutorials and documentation. New users can follow the guides to install PyTorch, try sample models, troubleshoot problems, and more. Each PyTorch tutorial is an entry point to mastering the features this deep learning framework has to offer.

Participating in PyTorch Discussion Forums

The best way to learn is to engage. That's where PyTorch discussion forums fit in. Participating in forums helps you learn from experienced users and can be a platform to share your knowledge too. It's like having an omnipresent study group that's forever ready to help.

Contributions to PyTorch: How to Get Involved?

The beauty of open source lies in contribution. Whether it's proposing a feature, improving documentation, or writing an entire module, every contribution enriches PyTorch. Visit the PyTorch GitHub repository to contribute and help make PyTorch better for everyone.

Noteworthy Projects in the PyTorch Ecosystem

The PyTorch ecosystem thrives with lively applications across domains. Explore the novel and creative ways people put PyTorch's flexibility to work, and how easily it integrates into a wide variety of use cases.

Real-world Applications of PyTorch

PyTorch, with its dynamic computation graph and robust community support, offers a flexible and intuitive framework for machine learning. Its adoption crosses various industries, underlining its capability to address unique challenges in innovative ways.

Case Studies: Companies Using PyTorch

1. Facebook: As the creator of PyTorch, Facebook utilizes the framework extensively for its AI and machine learning projects, including computer vision tasks for content filtering and deep learning models for recommendation systems.

2. Tesla: Tesla uses PyTorch for its Autopilot features. The framework's ability to prototype rapidly and scale allows Tesla to innovate quickly in the autonomous vehicle space.

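3. OpenAI: Known for its cutting-edge research in AI, OpenAI employs PyTorch for a variety of projects. In January 2020 it announced that it was standardizing on PyTorch as its primary deep learning framework. Its famed GPT models have set new standards in natural language processing (NLP), although the original GPT and GPT-2 code releases predate that switch and were built with TensorFlow.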

Insights: These case studies reveal how PyTorch's flexibility in model development and its capacity for seamless scaling make it a preferred choice for companies working on the frontier of AI research and application.

Academic Research with PyTorch

PyTorch has become a favorite in academia due to its ease of use and dynamic nature. It's particularly popular for AI research, where the need to experiment rapidly with models is paramount.

Example: Researchers at MIT have utilized PyTorch for projects ranging from computer vision systems that interpret medical imaging to natural language processing tasks that decipher ancient scripts.

Importance: For researchers and educators, PyTorch lowers the barrier to entry in AI research, enabling a focus on innovation rather than wrestling with the intricacies of the framework.

Innovative Projects Done with PyTorch

1. Deep Learning for Earthquake Prediction: Scientists have used PyTorch to build models that learn from seismic data, aiming to predict earthquakes with higher accuracy than traditional methods.

2. Real-time Language Translation: Leveraging PyTorch's capabilities, developers have created applications that offer real-time translation of spoken language, breaking down communication barriers across the globe.

Impact: These innovative projects showcase PyTorch's ability to handle diverse and complex datasets, providing solutions to some of the world's most pressing issues.

Future Trends in PyTorch Usage

1. AI Democratization: PyTorch continues to play a pivotal role in democratizing AI, making advanced machine learning techniques accessible to a broader audience.

2. Advancements in NLP and Computer Vision: As PyTorch evolves, it's expected to drive significant breakthroughs in NLP and computer vision, areas critical to advancing human-computer interaction.

3. Cross-industry Adoption: From healthcare to automotive, PyTorch's versatility and ease of use encourage its adoption across various industries, promising innovative solutions to industry-specific challenges.

Predictions: Industry experts anticipate that PyTorch will remain at the forefront of AI and deep learning, propelled by its strong community, constant innovation, and the ever-growing need for more intuitive and powerful AI solutions.

Conclusion: The Future of PyTorch and Deep Learning

PyTorch isn't static; it keeps evolving with new features and improvements. PyTorch 2.0, for example, introduced torch.compile, which can speed up many existing models with a one-line change. Upgrades like these make installing PyTorch a worthwhile investment for deep learning practitioners.

What Makes PyTorch a Sustainable Framework?

With a sturdy design, rich features, and a vibrant community, PyTorch shows every sign of long-term sustainability. It's a workhorse capable of delivering results consistently across different domains and scales, justifying the minor effort it takes to run pip install torch and get started.

The Role of PyTorch in the Future of Deep Learning

The future of deep learning is exciting, and PyTorch is expected to be an integral part of it. Promising flexibility and easy manipulation of deep neural networks, it's well-positioned for next-gen AI applications.

Transitioning from a PyTorch Beginner to an Expert

While PyTorch is beginner-friendly, mastering it requires dedication and practice. However, with a wealth of resources and an active community, that journey from newbie to expert can be as engaging as it is rewarding.

Closing Remarks & Encouragement to Learn More

In our expedition into PyTorch, we've unearthed many fascinating aspects. But remember, each PyTorch tutorial or forum post is a gateway to further learning. Keep exploring, keep learning, and discover the endless opportunities the PyTorch universe presents.

Frequently Asked Questions (FAQs)

How Does PyTorch Differ from Other Deep Learning Frameworks?

PyTorch was designed around dynamic computation graphs from the start, which provides flexibility when building complex model architectures. Its intuitive, Pythonic interface makes it beginner-friendly and easy to debug.

What Are Tensors in PyTorch?

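Tensors in PyTorch are similar to NumPy’s ndarrays, with the addition of GPU acceleration. They are PyTorch's core data structure: they hold inputs, outputs, and model parameters, and when created with requires_grad=True, autograd tracks the operations performed on them so gradients can be computed.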

Why Is PyTorch Preferred for Research Purposes?

Because of its dynamic computation graphs and simple design, PyTorch is preferred for research. It allows for easier prototyping and experimenting, offering a higher level of flexibility and speed during implementation.

What’s the Use of PyTorch's Autograd Feature?

Autograd is one of PyTorch's most powerful tools. It records the operations performed on tensors in a computational graph and then backpropagates through that graph to compute gradients automatically, which is what makes training deep neural networks practical.

Can PyTorch Be Used for Production Deployment?

Yes. PyTorch supports production deployment through TorchScript and its JIT compiler (torch.jit), which serialize models so they can run efficiently in C++ runtime environments without Python.